Topics

62. Variable Costing vs Full Costing for the Profit Center Assessment

| $ (K) | Alfa Inc. | Division Smart TV | Division PC |
| --- | --- | --- | --- |
| Revenues | 4,000 | 2,400 | 1,600 |
| Variable Costs | 1,600 | 1,000 | 600 |
| Contribution Margin | 2,400 | 1,400 | 1,000 |
| Controllable Fixed Costs | 450 | 290 | 160 |
| Controllable Margin | 1,950 | 1,110 | 840 |
| Noncontrollable Fixed Costs | 1,100 | 700 | 400 |
| Contribution By Profit Center | 850 | 410 | 440 |
| Costs at Company Level | 300 | | |
| Operating Income | 550 | | |

The concept we make use of in the above exhibit is the controllability of costs within one year or less.

In the controllable cost category fall the Variable Costs and some kinds of Fixed Costs such as time indirect labor contracts, research projects, sales promotion, etc.

The rest of Fixed Costs belong to Noncontrollable ones.

The profit obtained by subtracting all these costs from Revenues is the Contribution by Profit Center. The exhibit thus gives the basis on which one can assess the performance of the Profit Centers in the short term (Controllable Margin) and also in the long term (Contribution By Profit Center).
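The layering of the exhibit can be reproduced with a few lines of code. The following is only a minimal sketch: the figures come from the exhibit (in $ thousands), while the dictionary keys and the function name are illustrative choices of mine.

```python
# Figures in $ thousands, taken from the exhibit; key names are illustrative.
divisions = {
    "Smart TV": {"revenues": 2400, "variable_costs": 1000,
                 "controllable_fixed": 290, "noncontrollable_fixed": 700},
    "PC": {"revenues": 1600, "variable_costs": 600,
           "controllable_fixed": 160, "noncontrollable_fixed": 400},
}
company_level_costs = 300


def segment_margins(d):
    """Return the three margin levels for one profit center."""
    contribution_margin = d["revenues"] - d["variable_costs"]
    controllable_margin = contribution_margin - d["controllable_fixed"]
    contribution_by_center = controllable_margin - d["noncontrollable_fixed"]
    return {
        "contribution_margin": contribution_margin,
        "controllable_margin": controllable_margin,
        "contribution_by_profit_center": contribution_by_center,
    }


results = {name: segment_margins(d) for name, d in divisions.items()}

# Company-level costs are charged only at the level of the whole business.
operating_income = (
    sum(r["contribution_by_profit_center"] for r in results.values())
    - company_level_costs
)

for name, r in results.items():
    print(name, r)
print("Operating Income:", operating_income)
```

Keeping the company-level costs outside the division loop mirrors the exhibit, where they are not charged to any Profit Center.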

This Income Statement may be improved further to make the assessment of the Profit Center Managers fairer, but this will be the core of a future publication.

61. The New Evolution of the Performance Indicators

In these times of great volatility, uncertainty and, above all, disruptions in the normal workflow of all business departments, it becomes clear that many of the performance indicators put in place by an organization should be raised to an upper level.

What do we mean by upper level?

In order to see the upper level we have to look at the disruptions happening now.

The main issues of this period, due to the Covid pandemic and the war in Ukraine, are the higher prices of materials, gas and energy, long delays in the delivery of those materials, changes in the actors throughout the supply chain and, last but not least, the shift of businesses to new and more profitable market segments.

As a result, all the projections based on the old indicators, both financial and non-financial, are bound to fail and lose their usefulness for the business.

The upper level, then, is the ability to assess how well the organization responds to unpredictability, by using new indicators or by combining those already in place in a way that enables managers to monitor the strength of their respective departments in responding to potential changes and to set up all the tools needed to stay "afloat".

Of course, structural improvements to the business processes are possible, but these depend on management decisions about the organization that produce their effects in the medium to long term by building the business skills needed to face potential unpredictability, and that are conceived and carried out based precisely on the results of the most appropriate responsiveness indicators.

For instance, a decent process management system that pays attention to activities that would interrupt or delay the user's workflow would be a good lever of improvement.

Waiting time and other forms of idle time should be spotted and, where possible, reduced, eliminated or transformed.

What do I mean by transformed?

If we change our point of view, waiting time, for instance, is a significant indicator of the improvement margin hidden in an organization.

It should be seen not just as a waste within the usual tasks but also as an opportunity to carry out new ones and to find solutions.

In such a case it can become a business value-added activity.

As to the subject of this discussion, letting the workers do something productive while the workflow is suspended, for instance, might also help the business to face the extraordinary problems associated with these times even better.

Nonetheless, all these "adjustments", and many more, can be made only if responsiveness indicators that are timely and fit for purpose are put in place to alert management to emerging issues.

I am talking about all kinds of indicators, financial and nonfinancial, global and at department level.

Let's give an example of a financial one, which compares the changes in two different components over a defined time interval and takes the ratio between them.

Financial Reaction Speed

Total Contribution Margin Variance / Total Variable Cost Variance in a given time interval gives an important indication of the financial value of the reaction speed of a business in these troubled times disrupted by hyperinflation and by changes in the actors of the supply chain.

This indicator weighs the capacity of the business to be profitable and gives it the chance to monitor the value of its choices even in the short term.
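As a rough illustration, the ratio can be computed from two snapshots taken at the start and at the end of the interval. This is only a sketch under my own assumptions: "variance" is read here as the change between the two snapshots, the function name is hypothetical, and the figures are invented for the example.

```python
def financial_reaction_speed(cm_start, cm_end, vc_start, vc_end):
    """Change in total contribution margin divided by change in total variable costs."""
    delta_cm = cm_end - cm_start
    delta_vc = vc_end - vc_start
    if delta_vc == 0:
        raise ValueError("Variable costs did not change: the ratio is undefined.")
    return delta_cm / delta_vc


# Hypothetical figures in $ thousands for one quarter.
ratio = financial_reaction_speed(cm_start=2400, cm_end=2520,
                                 vc_start=1600, vc_end=1760)
print(f"Financial Reaction Speed: {ratio:.2f}")
```

With these invented figures the contribution margin grows by 120 while variable costs grow by 160, so the ratio is below 1: the margin is growing more slowly than the variable costs over the interval.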

An even more timely indicator for a manufacturing company is the same ratio computed with the Throughput Margin.

Throughput Margin Variance / Direct Material Cost Variance

This indicator may be relevant with reference both to the total amount and to the amounts concerning just the single products.
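For readers who want the ratio written out, here is one possible formalization. It assumes the common definition of Throughput Margin as revenues minus direct material costs, which the text does not state explicitly, and it uses $\Delta$ for the change over the chosen time interval (my notation).

```latex
\[
\text{Throughput Margin} = \text{Revenues} - \text{Direct Material Costs}
\]
\[
\text{Throughput Reaction Speed}
  = \frac{\Delta\,\text{Throughput Margin}}{\Delta\,\text{Direct Material Costs}}
\]
```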

As a matter of fact, the Variable Overheads are known in their actual amount only at the end of an accounting period, after the related closing procedures, while the direct materials and their associated costs are recorded and attributed to their cost objects "immediately" when they are consumed; this makes the alert given by the throughput-based ratio more timely.

As we know, the financial results measure a process already accomplished, while other elements may reveal some issues in advance, before they take on a financial value.

We are talking about the nonfinancial indicators.

Nonfinancial indicators are becoming more and more important for all business departments when facing continuous emerging disruptions, just as is happening today.

Moreover, new KPIs or new combinations of the existing ones should be found in order to be able to face the problems and meet the customers' needs in a timely manner and more effectively than the competitors do.

Having said that, we won't list the ratios or other indicators department by department, since they differ depending on the industry and the company.

It would be very advisable to find them within a firm through the work of multidisciplinary teams where the C-suite people work together with the different functions in order to get what is most appropriate.

60. Management Accountant Profession in the Spotlight - The Last Song

How many times do I hear of CFOs accepting the proposal for a Costing software without consulting a skilled Management Accountant!!

The attention is focused just on the speed of reporting and the ease of the related procedures, together with the number of reports.

No one considers the fact that the appropriateness of those reports may be lacking!!!

Since this is not the first time, but will be the last, that I examine this root cause of every kind of judgment error, I will make a shortlist of the factors to be taken into account when choosing a costing system, factors that even the best software vendor cannot "impose".

a) Job Costing, Process Costing, Operation Costing.

b) Standard, Normal, Actual.

c) Volume-Based, Activity-Based.

d) Business Rate, Department Rate.

e) Theoretical, Practical, Normal, Budgeted Capacity-Based method.

f) Empirical, Heuristic, Mixed leading approach

Having seen that, a short focus on each of these elements is needed; in the end it should be clear enough that the choice of a costing system should be shared with the "best" of the business management accountants, one who combines skills with technical knowledge and familiarity with business processes.

a) Let's get started with the cost gathering method, which leads to the distinction among Job Costing, Process Costing and Operation Costing.

Let's suppose the Outputs of your processes are clearly distinguishable from each other, in the sense that they vary in value and features according to the kind and amount of resources employed to make the product or deliver the service.

In this case all the costs incurred (Direct Materials, Direct Labor and Overhead Costs) may be assigned to a specific product/service, order, batch of products or project.

In other words Job Costing is the cost gathering system to be used.

Instead, if you work at a mass production company whose products are very similar and homogeneous, so that they require similar processes and resource consumption, the most appropriate system is without a doubt Process Costing, which first "pays attention" to the costs incurred in the processes within the departments and, as a second step, determines the product/service unit cost.

It is not over!

When just one category of costs, usually the direct materials, varies from one output unit to another while the others are similar in type and amount, we may use Operation Costing.

What I just described would suffice to make it clear why the Management Accountant should be involved in making such a decision, but for the most skeptical I want to take my argument all the way!!

b) The kind of measurement also affects the choice of the costing system and leads to the distinction among Actual, Standard and Normal Costing.

The first determines the cost of the specific objects by taking into account the real costs incurred throughout the reference period (Overheads are generally known only at its end).

When the firm's information needs are urgent, as is often the case for pricing (which, moreover, cannot be subject to recurrent monthly fluctuations due to the change in Unit Fixed Costs caused by variations in monthly output volume), management can opt for Normal Costing. It attributes a predetermined Overhead amount to each product unit based on a predetermined rate, while assigning the actual amount of the other cost elements (direct materials in primis).

This approach is very appropriate when the strategy is based on Cost Leadership in a very dynamic and competitive environment where the costs of some elements (direct materials) fluctuate and, at the same time, the timeliness of pricing is a critical success factor.

We close this distinction by citing Standard Costing, adopted when a certain homogeneity is present in all the business processes over time, due for instance to low customization, and it is possible to standardize the product/service costs in all their elements without waiting for the costing at the end of the accounting period to have a reliable determination.

c) The Overhead Costs are attributed to the cost objects by taking into account the most reliable driver whose change in the quantity employed determines a specific variation in the Overhead amount.

When this driver expresses the amount of the Output (usually Labor Hours, Machine Hours) the method is called Volume-Based; when it results from each of the different "indirect" activities carried out per cost object (product, service, customer...), we have an Activity-Based approach.

It goes without saying that when the business processes are homogeneous, which often means having similar products, kinds of customers and projects, the Volume-Based approach fits that work environment well; in the opposite case, when the indirect activities and the resources needed to carry them out differ in nature because the products/services/projects/customers are rather different, the Activity-Based approach is the best possible method.

The literature about ABC is rich and I won't focus on how it operates, since the goal of this article is to highlight the general skills of a management accountant and the need for his participation in decision making as a partner and not just as an executor.

d) Another important issue, concerning the similarity or difference of the processes, calls for a comparison of the work done in the business departments, which should lead to a different way of determining the Overhead Allocation Rate when using Normal Costing, or also Standard Costing at the time of the Overhead Variance Analysis.

To be clearer, here is an example with reference to a Normal Costing System based on a Volume-Based approach.

We may apply two methods to allocate the Overhead Costs to a Product in case of multiple departments inside a firm:

1) General Allocation

Total Budget Overheads = $ 8,000,000

Budget Overheads Dpt A: $ 2,700,000

Budget Overheads Dpt B: $ 2,400,000

Budget Overheads Dpt C: $ 2,900,000

Total Budget Labor Hours (allocation driver): 192,000

Budget Labour Hours for Dpt A: 72,000

Budget Labour Hours for Dpt B: 55,000

Budget Labour Hours for Dpt C: 65,000

Hourly Overhead rate: $ 8,000,000/192,000 = $ 41.666

Let's suppose that the Actual Labor Hours for the Product A are the following:

Total Actual Labour Hours: 2,200

Actual Labor Hours on Prod. A for Dpt A: 1,000

Actual Labor Hours on Prod. A for Dpt B: 700

Actual Labor Hours on Prod. A for Dpt C: 500

Overheads Allocated to Prod. A = $ 41.666 X 2,200 = $ 91,665

2) Specific Allocation/Single Departments

Here is the second way to allocate Overheads to Product A, using the input values given above.

Hourly Overhead rate per Dpt

Hourly Overhead rate Dpt A: $2,700,000/72,000 = $ 37.5

Hourly Overhead rate Dpt B: $ 2,400,000/55,000 = $ 43.64

Hourly Overhead rate Dpt C: $ 2,900,000/ 65,000 = $ 44.61

Overheads Allocated to Prod. A from each Dpt

From Dpt A = $ 37.5 x Actual LB Hrs Dpt A = $ 37.5 X 1,000 = $ 37,500

From Dpt B = $ 43.64 x Actual LB Hrs Dpt B = $ 43.64 X 700 = $ 30,548

From Dpt C = $ 44.61 x Actual LB Hrs Dpt C = $ 44.61 X 500 = $ 22,305

Overheads Allocated to Prod. A = $ 90,353

As we can see, there is a difference of $ 1,312.

In this case we might judge the difference as not material and prefer the General Allocation method, which is easier and quicker to apply; but when department processes are so different that they absorb different quantities and kinds of resources (in some cases managers refer to different cost drivers), the most natural choice is to apply different department rates in order to make the product costing as accurate as possible.
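The two allocation methods can be compared in a few lines of code. This is a minimal sketch that uses the budget and actual figures of the example; the variable names are my own. Because it works with unrounded rates, its totals differ by a dollar or two from the hand calculation above, which rounds the hourly rates first.

```python
# Budget data from the example above; names are illustrative.
budget_overheads = {"A": 2_700_000, "B": 2_400_000, "C": 2_900_000}
budget_hours = {"A": 72_000, "B": 55_000, "C": 65_000}
actual_hours_prod_a = {"A": 1_000, "B": 700, "C": 500}

# 1) General Allocation: one business-wide hourly rate.
business_rate = sum(budget_overheads.values()) / sum(budget_hours.values())
general_allocation = business_rate * sum(actual_hours_prod_a.values())

# 2) Specific Allocation: one hourly rate per department.
dept_rates = {d: budget_overheads[d] / budget_hours[d] for d in budget_overheads}
specific_allocation = sum(
    dept_rates[d] * actual_hours_prod_a[d] for d in dept_rates
)

difference = general_allocation - specific_allocation

print(f"Business rate:       {business_rate:.3f}")
for d, rate in dept_rates.items():
    print(f"Rate Dpt {d}:          {rate:.3f}")
print(f"General allocation:  {general_allocation:,.2f}")
print(f"Specific allocation: {specific_allocation:,.2f}")
print(f"Difference:          {difference:,.2f}")
```

The size of the difference depends on how far the department rates are from each other and on how the product's hours are distributed across departments, which is exactly why the choice deserves a management accountant's judgment.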

e) Another issue is the choice of the base over which to spread the Fixed Overheads in order to obtain the Fixed Overhead Allocation Rate to apply to each cost object and so determine its cost.

As we saw in the articles dedicated to the Output Capacity of a business unit, the solutions are four:

Theoretical, Practical, Normal and Budgeted Capacity.

The definitions of these kinds are well-known and I won't repeat them here, inviting you to read the above mentioned articles on this webpage.

It's clear that the Unit Cost resulting from this choice typically increases when shifting from Theoretical to Practical and then to Normal Capacity (usually the average budgeted capacity over 3 years) or Budgeted Capacity, since the same Fixed Overheads are spread over a smaller number of units.

The consequences affect product-pricing decisions as well as performance evaluation.
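A quick numerical sketch shows how the choice of the capacity base moves the unit fixed cost. All figures below are hypothetical and chosen only to keep the arithmetic easy to follow; they do not come from the articles mentioned above.

```python
# Hypothetical figures: yearly fixed overheads and four capacity levels (units).
fixed_overheads = 1_200_000
capacity_levels = {
    "Theoretical": 120_000,
    "Practical": 100_000,
    "Normal": 80_000,
    "Budgeted": 75_000,
}

# Fixed Overhead Allocation Rate = Fixed Overheads / chosen capacity base.
fixed_overhead_rates = {
    name: fixed_overheads / units for name, units in capacity_levels.items()
}

for name, rate in fixed_overhead_rates.items():
    print(f"{name:<12} capacity: $ {rate:.2f} per unit")
```

In this invented case the unit fixed cost goes from $ 10 with Theoretical Capacity to $ 16 with Budgeted Capacity, a spread large enough to change a cost-based price.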

f) The last aspect I want to consider is the leading approach that drives business managers in deciding on a preferred costing system.

I am talking about the level of importance attributed to empirical research (if known), that is, the findings of studies on the accuracy of costing systems, which also draw on statistical techniques.

If we choose an empirical approach, for instance, we will find that research shows that Volume-Based systems might work when resource costs are concentrated and that ABC systems are likely to bring the greatest benefit when resource costs are diversified.

Another finding is that when forming pools of costs (each pool with one cost driver different from the other pools) in order to obtain an Overhead Allocation Rate, a low number of cost pools seems reasonable, because there is an acceptable tradeoff between the costs of adding more pools and the benefits of system accuracy.

Other findings have been made, but I won't list them, in keeping with the purpose of this article, which I repeat once more: highlighting how the strategic participation of a skilled management accountant in the choice and setup of a costing system is "compulsory" and not only advisable.

59. Digital Assets and Customer Data: How to Evaluate Them as Fixed Assets

May 5th 2022

It goes without saying that data is the most important source of value nowadays and that this holds for the vast majority of industries.

Without a doubt, from now on it will be even more of a catalyst for future economic growth, one that all business policies, and marketing ones in particular, are going to rely on to increase the bottom line.

That means that data is to be considered like an Asset that produces value through its use and reuse over time and is not consumed immediately like a Raw Material unit.

What do I mean by use and reuse in practical terms?

Please think of how a business handles its data:

It not only ingests, stores and maintains the data but also secures it.

What is more, its dedicated specialists acquire, aggregate, prepare, clean, structure and (why not) enrich, transform and map raw data in order to achieve a suitable format for better decision making.

In the end, data is made usable by the downstream data consumers within the same business.

The peculiarity is that these processes are repeated over time, often using the same data, which differs from what a business does with a consumable resource; hence the argument that data is to be considered like a Fixed Asset.

What about the determination of its financial value?

So far, all the value attributed to data has derived just from the expenses incurred to acquire a given amount of data to be "elaborated and interpreted" in order to better see and match the customer requirements.

We all have in mind, for instance, how much a market survey costs, considering the resources deployed to obtain that data and draw the correct insights.

In other words, the actual value of data is limited to the related outlay, which we can find in a business's Income Statement for the period, spread across different accounting items.

But this approach doesn't yield the real financial weight of data for the business and buries the concept of Intangibles, which in my opinion also covers data, whose continuous use makes it just like a Fixed Asset.

As a result, the issue that comes into play when applying data to the business world is how to assign a quantifiable value to it.

How to proceed? Which approach can we use?

One of these could be to leverage Economics concepts such as the economic multiplier effect, marginal costs, the marginal propensity to save, the marginal propensity to consume and the Marginal Propensity to Reuse.

This approach was conceived by Bill Schmarzo, creator of the Value Engineering methodology and well known for the Schmarzo Economic Digital Asset Valuation Theorem (where business data come in).

Let's go on step by step.

Schmarzo states that data can be reused across an unlimited number of use cases at zero marginal cost by producing an Economic Multiplier Effect.

What is it?

Quoting Schmarzo's words, it is the ratio of the impact of an incremental increase in investment on the resulting incremental increase in output or value.

Why and how should it impact the finance "world"?

Think, for instance, of ROI in its most used formula, Operating Income/Invested Capital; that means that an incremental increase in investment in Data (if considered like a Fixed Asset), through that multiplier effect, causes an incremental increase in the numerator (Operating Income).

I won't remind everyone here of the applications of ROI in the business world; they are known and in some cases have already been dealt with in other articles on this webpage.

But why and how would this incremental value of output happen?

In order to have the Economic Multiplier Effect for data, businesses can rely on two main drivers.

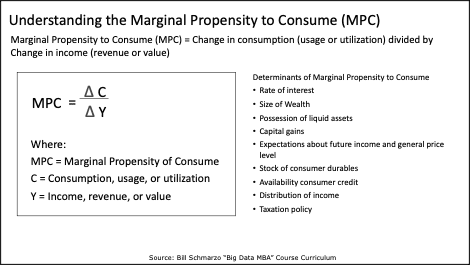

1) Firstly, they need to increase the Marginal Propensity to Consume (MPC) for data, which in other words means making it easier for data users to consume data.

What is MPC?

The Marginal Propensity to Consume is calculated as the change in consumption (usage or utilization) divided by the change in income (revenue or value).
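Written as a formula, the definition above reads as follows. The symbols are my own notation and not Schmarzo's: $C$ stands for consumption (usage or utilization of data) and $Y$ for income (revenue or value).

```latex
\[
\mathrm{MPC} = \frac{\Delta C}{\Delta Y}
\]
```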

See Exhibit 1 below, as illustrated by Schmarzo.

As for the determinants and other details of MPC, please bear with me for not giving a full treatment here: as you can easily see, the goal of this article is different, and you may refer to the sources that Bill Schmarzo makes available, also online.

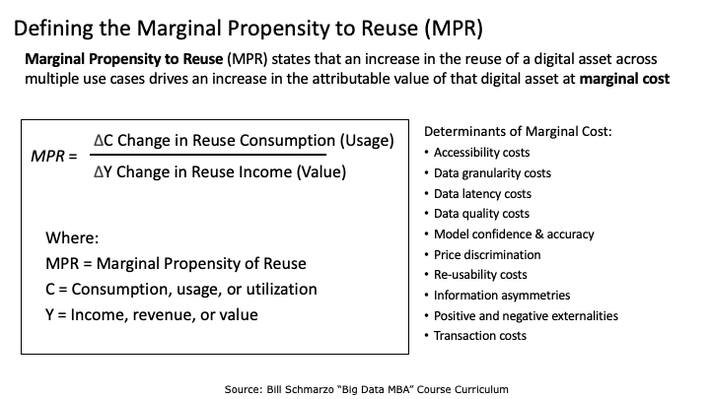

2) The second and most important driver of the Economic Multiplier Effect is the Marginal Propensity to Reuse (MPR).

To explain what MPR is, we start from the Schmarzo Economic Digital Asset Valuation Theorem, which states that organizations can take advantage of the sharing, reuse and continuous refinement of the organization’s data and analytic assets, since they produce a reduction in marginal costs, growth in marginal value and an acceleration in Economic Value at each subsequent business operational case that uses the same data and analytic assets.

Having said that, here is how Schmarzo describes the MPR.

The Marginal Propensity to Reuse (MPR) states that an increase in the reuse of a data set across multiple use cases drives an increase in the attributable value of that data set at zero marginal cost.

Exhibit 2

We also know from Schmarzo's works that "anything that prevents the reuse and refinement of the data and analytic assets, is destroying the economic potential of data. If there is no sharing and reuse of the data and analytic assets, then the Economic Multiplier Effect cannot take effect."

Bill Schmarzo goes on to develop a formula that yields the Economic Value of a Data Set based on the concepts I have just illustrated. I invite you to look at it in his materials; he is still working on it with the University of San Francisco to take this formula to the next level, as he literally says.

Having illustrated these Economics concepts, which highlight the great "weight" of Data (and thanking Bill Schmarzo for allowing me to cite his work), I want to make it clear that in financial terms Data should be considered a Fixed Asset precisely because it adds value over time through continuous use and is not totally consumed at its first use, as happens instead, for instance, with a Raw Material unit.

I would like to show a method of my own conception that returns a value of Data as a Fixed Asset, starting from the basics of my job, Managerial Accounting.

This explanation of the theory takes as an example the most value-adding use of data, that is, the use and reuse of customer data.

Customer Data Evaluation

First of all, the data a business owns about present and potential customers has often been obtained from external providers by paying a negotiated price, or through market surveys or the like carried out by the Sales & Marketing department in the execution of its daily tasks.

These costs are recorded in the business's Income Statement for the period, either under the specific purchase account or hidden in the worked hours of the employees who work on that data.

As a result, to know the value base of customer data to be capitalized, we should transfer the cost of the purchase to another account that sits within the Asset & Liabilities Statement as an Intangible Fixed Asset.

This step consists of making an accounting entry within a Managerial Accounting system that provides for the use of Asset Accounts and not only of Income Accounts, whose exclusive use is instead typical of most Managerial Accounting Systems.

For now, until this theory is acknowledged as a Generally Accepted Accounting Principle, it will be expressed through that specific Accounting system.

Here is an example:

| Debit | Credit | $ |
| --- | --- | --- |
| Customer Data Capitalized | Data Purchased | 45,000 |

A different kind of reasoning concerns the calculation of the value of people's work on data, which is to be made both for the collection of data and for other kinds of work such as entry, cleansing, integration from different sources, refinement, regrouping and, of course, the use made when getting in touch with clients and potential ones for new deals.

Why are we taking into account this data work?

Because it is intended to create value, which in practical terms means striking a deal or, by making the data more significant and consistent, increasing the likelihood of striking one.

If you are not convinced by this reasoning, please look again at the clarification by Bill Schmarzo that I quoted in the first part of this article, where he shows how the use and reuse of Data produce an Economic Multiplier Effect.

Which are the reference elements to evaluate this use and reuse of data?

I prefer to use an hour-based approach and to take into account just the most important cost category involved, as a "tribute" to the principles of clarity and significance: labor costs.

Here is the answer:

- The Labor Cost recorded in the Income Statement of the people involved.

- The Total Working Hours of those people throughout the reference period (net of the hours not worked for various reasons).

- The resulting Labor Hour Cost.

- The Working Hours spent within the reference period specifically on those kinds of tasks.

For instance, you must not count the hours spent on repairing errors in the data concerned.

These elements come into play at the end of a period, and the result of the appropriate calculations is an accounting entry to be made.

Of course, you may also make these calculations at the beginning of a period when you are estimating the future.

Let's go along with an example:

Labour Cost (of the involved dpts) for the period: $ 400,000

Working Hours: 13,600

Labor Hour Cost: $ 400,000/13,600 = $ 29.41

Working Hours on Data = 2,720

Data Work Value = $ 29.41 X 2,720 = $ 79,995
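The same calculation can be scripted so that it is repeated at each period end. This is a minimal sketch with the figures of the example; the variable names are mine. It also shows that the result depends slightly on whether the hourly cost is rounded to cents before multiplying, as in the example, or kept unrounded.

```python
# Figures from the example above; names are illustrative.
labor_cost = 400_000      # labor cost of the involved departments, in $
working_hours = 13_600    # total hours actually worked in the period
hours_on_data = 2_720     # hours spent on use and reuse of customer data

labor_hour_cost = labor_cost / working_hours

# As in the example: hourly cost rounded to cents before multiplying.
data_work_value_rounded = round(labor_hour_cost, 2) * hours_on_data

# Alternative: keep the hourly cost unrounded.
data_work_value_exact = labor_hour_cost * hours_on_data

print(f"Labor Hour Cost:                   $ {labor_hour_cost:.2f}")
print(f"Data Work Value (rounded rate):    $ {data_work_value_rounded:,.2f}")
print(f"Data Work Value (unrounded rate):  $ {data_work_value_exact:,.2f}")
```

Whichever convention is chosen, it should be applied consistently from period to period so that the capitalized amounts remain comparable.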

The following step is the above-mentioned entry, which transfers the amount in the Managerial Accounting system from the Income Statement to the Asset & Liabilities Statement.

| Debit | Credit | $ |
| --- | --- | --- |
| Customer Data Capitalized | Labor Costs | 79,995 |

This kind of evaluation of the Working Hours on use and reuse of Data should be done at the end of each period by taking into account the hours in that specific period and not the cumulated hours over different years.

At this point the Account "Customer Data Capitalized" adds up to $ 124,995 (45,000 + 79,995).

Considering that the marginal cost of reusing data is near zero, as Schmarzo tells us, you won't assess Depreciation as you do for physical assets, which cannot be reused across many use cases at near zero marginal cost.

As a matter of fact, there are considerable marginal costs (maintenance costs, utilization costs and other operating costs) associated with reusing a physical asset.

The only kind of "depreciation" that could be applied is a devaluation in anticipation of an actual deletion (or proven lack of use) of some data sets held by the business, for reasons such as statutory regulations, a change in the market segment and so on.

What about the impact on some important measurement and assessment matters?

If we have a starting Operating Income of $ 600,000 and "Total Assets" of $ 7,500,000, after these accounting "shifts" the new results will be respectively $ 724,995 for the Operating Income and $ 7,624,995 for the Total Assets.

What does this mean on the Financial Assessment side?

A higher ROI (in its most used formula), with all the different judgments that may follow in the different application cases of this indicator.

Initial ROI = 600,000/7,500,000 = 8%

New ROI = 724,995/7,624,995 = 9.5%
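The effect of the capitalization on ROI can be checked with a short script. It is only a sketch: the amounts are those of the example, the variable names are mine, and ROI is taken in the form Operating Income / Total Assets used in the two lines above.

```python
# Amounts from the example above, in $; names are illustrative.
operating_income = 600_000
total_assets = 7_500_000
data_purchased = 45_000        # purchase cost moved to the asset account
data_work_value = 79_995       # labor cost moved to the asset account

capitalized = data_purchased + data_work_value

# Costs leave the Income Statement (income rises) and enter the assets.
new_operating_income = operating_income + capitalized
new_total_assets = total_assets + capitalized

initial_roi = operating_income / total_assets
new_roi = new_operating_income / new_total_assets

print(f"Customer Data Capitalized: $ {capitalized:,}")
print(f"Initial ROI: {initial_roi:.1%}")
print(f"New ROI:     {new_roi:.1%}")
```

Both the numerator and the denominator grow by the same amount, and since ROI is below 100% the ratio necessarily rises.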

The variation in ROI is significant in this example, and it could be even more significant, and more realistic, if we abandon the Historical Cost Evaluation Method and move to a "Revaluation Approach".

As a matter of fact, the use of Customer Data is intended to generate Revenues that build in a margin on the costs incurred, and attributing a value different from the historical one could give a more correct financial "picture" of this new category of Fixed Asset.

How can we do this?

After carrying out the calculations and the accounting entries just seen, we need to take into account the logic of the financial capitalization where the concepts of Present Value, Interest Rate and Principal and Interest come into play.

I will be clearer by recalling the main concepts and applying them to this case:

- The Present Value is the Cost that results from the records you have done so far under the Account "Customer Data Capitalized".

- The Interest Rate could be either prudential (since it would represent the Opportunity Cost), taking the form of WACC, or risk-based (reflecting the prospects of growth or decline of the market segment).

- The Principal and Interest are achieved by applying the compound capitalization formula.
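For reference, the standard compound capitalization formula can be written as below. The symbols are my own shorthand: $PV$ is the balance of "Customer Data Capitalized", $r$ the chosen interest rate and $n$ the number of years.

```latex
\[
\text{Principal and Interest} = PV \,(1 + r)^{n}
\]
\[
n = 1 \;\Rightarrow\; \text{Principal and Interest} = PV \,(1 + r)
\]
```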

It is advisable, though not mandatory, that the reference time for the capitalization be one year (in this case the compound capitalization is equivalent to a simple one). Why?

Considering that this operation is to be done on the working hours of every new period at its end, there is a risk of making the work heavy and not very reliable.

In fact, if you operate over two or more years, at each following period-end capitalization you need to take up again the same data sets already "worked" in the previous periods and already capitalized at their ends, and you will have to update their value through a new interest rate.

Simply put, when considering two or more years, the yearly estimation work through compounding becomes heavy.

Moreover, the capitalization rate is more reliable when set for the year to come.

Taking this for granted, why could a CFO decide to resort to compounding?

One of the reasons can be found in regulatory provisions that allow some categories of data to be kept only for a specific number of periods; as a result, the manager might like to see their financial value for the whole period and its impact on financial performance measurement and assessment.

I remind you in this regard that the business needs an appropriate operating system able to distinguish the different data sets and the hours worked on each of them; likewise, I remind all of us of the principle stating that the cost of acquiring information should be lower than its benefit.

Here is an example of the compound capitalization made by taking the previous amount as a starting base.

Present Value of Customer Data Capitalized: $ 124,995

Interest Rate (Market Value Growth Prospects of next year): 5%

Time: 1 Year

Principal and Interest = 124,995 X (1 + 0.05) = 131,244.75

Revaluation = 131,244.75 - 124,995 = 6,249.75

The further entry is the following:

| Debit | Credit | $ |
| --- | --- | --- |
| Customer Data Capitalized | Revaluation | 6,249.75 |

In the end the value recorded into the account Customer Data Capitalized adds up to $ 131,244.75.

The benefit of this "Market" (improperly named) approach is a clear perception of the realistic value of Customer Data; this is more evident when the prospects of the market are negative and the interest rate has a minus sign, yielding a decrease in the final balance of Customer Data Capitalized, corresponding to an amount to be recorded as a Devaluation.

For instance, let's suppose that the prospects consist of a 5 % decrease in the Market Value.

Here is the new Accounting Entry in our example.

| Debit | Credit | $ |
|---|---|---|
| Devaluation | Customer Data Capitalized | 6,249.75 |

The final value of Customer Data Capitalized is $ 118,745.25 (124,995 - 6,249.75).
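The revaluation and devaluation entries in this example follow the same arithmetic, which can be sketched in a few lines of Python. This is only a minimal sketch: the function name `revalue` and the use of `Decimal` are assumptions made for illustration, and only the one-period case of the example is covered.

```python
from decimal import Decimal


def revalue(balance: Decimal, rate: Decimal) -> tuple[Decimal, Decimal]:
    """Capitalize `balance` for one period at `rate`.

    Returns (new_balance, adjustment). A positive adjustment is a
    Revaluation, a negative one is a Devaluation.
    """
    new_balance = balance * (Decimal("1") + rate)
    adjustment = new_balance - balance
    return new_balance, adjustment


present_value = Decimal("124995")  # Customer Data Capitalized, from the example

# Positive market prospects: +5%
up_balance, up_adjustment = revalue(present_value, Decimal("0.05"))
print(up_balance, up_adjustment)      # 131244.75 6249.75

# Negative market prospects: -5%
down_balance, down_adjustment = revalue(present_value, Decimal("-0.05"))
print(down_balance, down_adjustment)  # 118745.25 -6249.75
```

The sign of the adjustment tells you which account to use: Revaluation when it is positive, Devaluation when it is negative.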

The application of the Revaluation approach of course also affects the ROI calculation, which differs even more from the one obtained when Customer Data are treated according to the traditional accounting standards.

In this regard, be aware that the Revaluation (or Devaluation) account does not enter Operating Income and, as a result, does not enter the numerator of ROI.

In conclusion, digital assets and customer data are nowadays such a valuable base for businesses to rely on that accounting methods can no longer neglect them or keep treating them in the traditional way.

In this article we have tried to prove these concepts and to give them a practical solution that may help business managers have better decision-making support.

Suggestions, requests for clarifications, opinions are welcome on Page Contacts, as always.

Carlo Attademo

58. How to link the Capital-Investment Decisions to the Strategy

Capital-budgeting projects are evaluated mainly in their financial aspect, and that leads us to choose among the most suitable methods we know: those that make use of Cash Flows, such as the Net Present Value, Internal Rate of Return and Payback Period, and those that look at ROI and other financial indicators.

Most of the time the business's top managers take the adherence of the capital-investment projects to the strategy for granted, based on their feelings and experience, so that the people charged with measuring the numerical advantage of one project over the others worry only about the financial aspect.

As a matter of fact it isn't always like this, and it is highly advisable to make use of multicriteria techniques that help managers assess different projects from different points of view.

More importantly, these methods allow you to assess compatibility with the business's strategy and, if you go deeper, even to insert the selected projects into one or more of the perspectives of the Balanced Scorecard in order to monitor the execution of the strategy over time.

We all know the BSC usually breaks down into four categories, known as the Financial, Learning and Growth, Customer and Internal Processes areas; how you can insert the chosen projects there and use them as a metric is not the object of our dissertation, which focuses instead on how to evaluate capital-investment decisions under aspects of a different nature.

The subject of this dissertation is therefore one of these multicriteria techniques; we are going to focus on the most useful one.

This is the AHP (Analytic Hierarchy Process), whose purpose is to quantify all of your evaluation criteria, both tangible and intangible, and whose main characteristic is the pairwise comparison of the criteria.

AHP makes you assign a numeric value to the evaluation criteria to "weigh" their relative importance. The higher the number assigned to one criterion the higher its importance.

In the end you may see to which extent each project is in line with your strategic goal.

Let's go on step by step to analyse this method and its usefulness.

What are the main phases of AHP?

1. Define the main goals of the business that can be named as criteria to be considered for the decision making in the choice of the project.

For instance, we spot these three criteria: Cash Flow creation, Share Growth in the market segment, Higher Quality of the Products.

2. Assess the relative weight of each decision criterion.

It consists of designing a pairwise comparison matrix in which the criteria listed in the left-hand column are compared with the criteria listed along the top.

After that, you enter into each cell a number that quantifies the dominance of one element over another.

If many people take part in this evaluation process, you need an agreed way of expressing their joint judgment.

3. Calculate the weights of the criteria.

Divide each value by its column's total and then sum the resulting values across each row.

Finally, calculate the average of each criterion's row to assign it a weight that you can compare.

In this way you will have obtained a hierarchy of priorities among the criteria. The highest score corresponds to the option most in line with your goal.

4. Check out the consistency of the judgments.

If, for instance, a participant states in the matrices that Cash Flow creation is more important than Higher Quality of the Products, the judgments involving the other criterion (Share Growth in the market segment) must be consistent with that statement.

Let's make an example:

Cash Flow creation = 4 x Higher Quality of the Products

Higher Quality of the Products = 1/4 x Share Growth in the market segment

That means that "Share Growth in the market segment" should have the same value as the "Cash Flow creation" and have 1 in the cell of the matrix when compared to it. If this doesn't happen, the data is inconsistent and the calculations must be made again before interpreting the matrix.
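The rule used in this example can be checked mechanically: if criterion i is a times as important as j, and j is b times as important as k, then i should be a x b times as important as k. The following is a minimal sketch of that check; the function names `inconsistent_triples` and `build`, and the nested-dictionary layout, are assumptions made for illustration only.

```python
from fractions import Fraction
from itertools import permutations

CF, SG, HQ = "Cash Flow creation", "Share Growth", "Higher Quality"


def inconsistent_triples(judgments):
    """Return every (i, j, k) where judgments[i][k] != judgments[i][j] * judgments[j][k]."""
    return [
        (i, j, k)
        for i, j, k in permutations(judgments, 3)
        if judgments[i][k] != judgments[i][j] * judgments[j][k]
    ]


def build(cf_vs_sg):
    """Build the reciprocal matrix of the example for a given CF-vs-SG judgment."""
    cf_vs_hq = Fraction(4)     # Cash Flow creation = 4 x Higher Quality
    hq_vs_sg = Fraction(1, 4)  # Higher Quality = 1/4 x Share Growth
    return {
        CF: {CF: Fraction(1), SG: cf_vs_sg, HQ: cf_vs_hq},
        SG: {CF: 1 / cf_vs_sg, SG: Fraction(1), HQ: 1 / hq_vs_sg},
        HQ: {CF: 1 / cf_vs_hq, SG: hq_vs_sg, HQ: Fraction(1)},
    }


# Share Growth judged equal to Cash Flow creation (1 in the cell): consistent.
print(inconsistent_triples(build(Fraction(1))))  # []

# Any other value in that cell (here 2, a hypothetical judgment) is flagged.
print(len(inconsistent_triples(build(Fraction(2)))) > 0)  # True
```

Exact fractions are used so that values such as 1/4 are compared without floating-point noise.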

5. Analyse the competing projects and compare them with each other with reference to the criteria by following the same "rules".

6. Obtain the overall priority score of each project.

How?

As I often repeat, the best way to explain a concept is to give an example.

Here it is:

- Let's start from the goals described earlier:
  - Cash Flow creation
  - Share Growth in the market segment
  - Higher Quality of the Products

- Then you can assess the relative weight of each criterion by assigning a score in the following matrix.

Table 1

| | Cash Flow creation | Share Growth in M.S. | Higher Quality of Product |
|---|---|---|---|
| Cash Flow creation | 1 | 1/3 | 2 |
| Share Growth in M.S. | 3 | 1 | 1/4 |
| Higher Quality of Product | 1/2 | 4 | 1 |
| Total | 4.5 | 5.33 | 3.25 |

- Divide each value by its column's total.

Table 2

| | Cash Flow creation | Share Growth in M.S. | Higher Quality of Product |
|---|---|---|---|
| Cash Flow creation | 0.22 | 0.06 | 0.62 |
| Share Growth in M.S. | 0.67 | 0.19 | 0.08 |
| Higher Quality of Product | 0.11 | 0.8 | 0.31 |

- Calculate the average of each criterion to assign it a weight that you can compare.

Table 3

| | Average | Percent Weight |
|---|---|---|
| Cash Flow creation | (0.22+0.06+0.62)/3 | 0.3 (30%) |
| Share Growth in M.S. | (0.67+0.19+0.08)/3 | 0.313 (31.3%) |
| Higher Quality of Product | (0.1+0.8+0.31)/3 | 0.403 (40.3%) |

We can see from these values that Higher Quality of Product is the most important criterion, since its weight amounts to 40.3%.
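The calculation behind Tables 1 to 3 can be reproduced with a short Python sketch. The function name `ahp_weights` and the list-of-lists layout are assumptions made for illustration; the procedure is the one described in this example (divide by the column total, then average each row). Because the sketch works with exact fractions instead of two-decimal intermediate values, its weights (about 30.0%, 31.0% and 39.0%) differ slightly from the rounded figures of Table 3, while the ranking of the criteria stays the same.

```python
from fractions import Fraction as F


def ahp_weights(matrix):
    """Approximate AHP weights: normalize each column, then average each row."""
    n = len(matrix)
    column_totals = [sum(row[c] for row in matrix) for c in range(n)]
    normalized = [[row[c] / column_totals[c] for c in range(n)] for row in matrix]
    return [sum(row) / n for row in normalized]


criteria = ["Cash Flow creation", "Share Growth in M.S.", "Higher Quality of Product"]

# Pairwise comparison matrix from Table 1 (row criterion vs column criterion).
table_1 = [
    [F(1),    F(1, 3), F(2)],
    [F(3),    F(1),    F(1, 4)],
    [F(1, 2), F(4),    F(1)],
]

weights = ahp_weights(table_1)
for name, weight in zip(criteria, weights):
    print(f"{name}: {float(weight):.3f}")
```

Since every normalized column sums to 1, the weights computed this way always add up to exactly 100%.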

As you may have noticed, each of the three criteria belongs to a different perspective of the Balanced Scorecard we saw above, so that the project being selected may be inserted into one of the specific areas (how to do it can be discovered by writing on the Contacts page of this website).

Cash Flows belong to the Financial area, Share Growth to the Customer perspective and finally Higher Quality of Product to Internal Processes.

Don't forget that all the BSC's areas are connected, so that the results of a particular Critical Success Factor (in our case, for instance, Higher Quality of Product) belonging to a specific area affect the results of another CSF belonging to another area (in our case Share Growth in the Market Segment), which in turn impacts the ultimate CSF (in our case Cash Flows) belonging to yet another area.

Let's go back to the steps.

- Analyse the competing projects by comparing them with each other with reference to each of the criteria, following the same "rules", in order to obtain the overall priority score of each project.

a) In our example we can compare the different competing projects (let's suppose A, B, C) against Higher Quality of Product, then against the Cash Flow creation criterion and finally against Share Growth. The priority score of each project is obtained in the same way we have seen previously.

Table 4

| Higher Quality of Product | Project A | Project B | Project C | Priority |
|---|---|---|---|---|
| Project A | 1 | 2 | 1/3 | 0.25 |
| Project B | 1/2 | 1 | 1/3 | 0.16 |
| Project C | 3 | 3 | 1 | 0.59 |
| Total | 4.5 | 6 | 1.67 | 1 |

Table 5

| Cash Flow Creation | Project A | Project B | Project C | Priority |
|---|---|---|---|---|
| Project A | 1 | 1/3 | 4 | 0.35 |
| Project B | 3 | 1 | 1/3 | 0.33 |
| Project C | 1/4 | 3 | 1 | 0.31 |
| Total | 4.25 | 4.33 | 5.33 | 0.99 |

Table 6

| Share Growth in the M. S. | Project A | Project B | Project C | Priority |
|---|---|---|---|---|
| Project A | 1 | 3 | 1/2 | 0.36 |
| Project B | 1/3 | 1 | 5 | 0.37 |
| Project C | 2 | 1/5 | 1 | 0.27 |
| Total | 3.33 | 4.2 | 6.5 | 1 |
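The Priority columns of Tables 4 to 6 can be recomputed with the same normalize-and-average procedure applied to the criteria. A minimal sketch follows; the helper `priorities` and the dictionary layout are illustrative assumptions rather than part of the method itself.

```python
from fractions import Fraction as F


def priorities(matrix):
    """Normalize each column by its total, then average each row."""
    n = len(matrix)
    totals = [sum(row[c] for row in matrix) for c in range(n)]
    return [sum(row[c] / totals[c] for c in range(n)) / n for row in matrix]


# Pairwise comparisons of Projects A, B, C (row vs column) for each criterion.
tables = {
    "Higher Quality of Product": [
        [F(1), F(2), F(1, 3)],
        [F(1, 2), F(1), F(1, 3)],
        [F(3), F(3), F(1)],
    ],
    "Cash Flow Creation": [
        [F(1), F(1, 3), F(4)],
        [F(3), F(1), F(1, 3)],
        [F(1, 4), F(3), F(1)],
    ],
    "Share Growth in the M. S.": [
        [F(1), F(3), F(1, 2)],
        [F(1, 3), F(1), F(5)],
        [F(2), F(1, 5), F(1)],
    ],
}

# Priorities rounded to two decimals, in the order Project A, B, C.
rounded = {
    criterion: [round(float(p), 2) for p in priorities(matrix)]
    for criterion, matrix in tables.items()
}
for criterion, values in rounded.items():
    print(criterion, dict(zip("ABC", values)))
```

Rounded to two decimals, the results match the Priority columns of the three tables, including the 0.99 total in Table 5, which is only a rounding effect.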

b) Multiply the score of each project with reference to each criterion by the weighted priority of that criterion.

Project A

Project A = Score of Project A for "Higher Quality of Product" x Weight of "Higher Quality of Product" = 0.25 x 40.3 = 10.08

Project A = Score of Project A for "Cash Flow Creation" x Weight of "Cash Flow Creation" = 0.35 x 30 = 10.5

Project A = Score of Project A for "Share Growth in the M. S." x Weight of "Share Growth in the M. S." = 0.36 x 31.3 = 11.27

Project B

Project B = Score of Project B for "Higher Quality of Product" x Weight of "Higher Quality of Product" = 0.16 x 40.3 = 6.45

Project B = Score of Project B for "Cash Flow Creation" x Weight of "Cash Flow Creation" = 0.33 x 30 = 9.9

Project B = Score of Project B for "Share Growth in the M. S." x Weight of "Share Growth in the M. S." = 0.37 x 31.3 = 11.58

Project C

Project C = Score of Project C for "Higher Quality of Product" x Weight of "Higher Quality of Product" = 0.59 x 40.3 = 23.78

Project C = Score of Project C for "Cash Flow Creation" x Weight of "Cash Flow Creation" = 0.31 x 30 = 9.3

Project C = Score of Project C for "Share Growth in the M. S." x Weight of "Share Growth in the M. S." = 0.27 x 31.3 = 8.45

c) Get the overall priority of each project by summing up all the new weighted criteria scores and rank the projects.

Project A's Overall Priority Score = 10.08 + 10.5 + 11.27 = 31.85

Project B's Overall Priority Score = 6.45 + 9.9 + 11.58 = 27.93

Project C's Overall Priority Score = 23.78 + 9.3 + 8.45 = 41.53
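Steps b) and c) can be sketched in Python as a weighted sum. The sketch takes the project priorities exactly as they appear in Tables 4 to 6 (so 0.25 for Project A under "Higher Quality of Product") and the percent weights of Table 3; the names `weights`, `priorities` and `overall_score` are illustrative assumptions. Each product is rounded half-up to two decimals before summing, so the last digit of a score may shift under a different rounding convention.

```python
from decimal import Decimal, ROUND_HALF_UP

HQ = "Higher Quality of Product"
CF = "Cash Flow Creation"
SG = "Share Growth in the M. S."

# Criterion weights in percent, as given in Table 3.
weights = {HQ: Decimal("40.3"), CF: Decimal("30"), SG: Decimal("31.3")}

# Project priorities per criterion, as given in Tables 4 to 6.
priorities = {
    "Project A": {HQ: Decimal("0.25"), CF: Decimal("0.35"), SG: Decimal("0.36")},
    "Project B": {HQ: Decimal("0.16"), CF: Decimal("0.33"), SG: Decimal("0.37")},
    "Project C": {HQ: Decimal("0.59"), CF: Decimal("0.31"), SG: Decimal("0.27")},
}

CENT = Decimal("0.01")


def overall_score(project):
    """Sum priority x weight over all criteria, rounding each term to 2 decimals."""
    return sum(
        (priorities[project][c] * weights[c]).quantize(CENT, rounding=ROUND_HALF_UP)
        for c in weights
    )


scores = {project: overall_score(project) for project in priorities}
ranking = sorted(scores, key=scores.get, reverse=True)

for project in ranking:
    print(project, scores[project])
```

With these inputs the sketch gives 41.53 for Project C, 31.85 for Project A and 27.93 for Project B, so Project C ranks first.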